Big data. Cloud data. AI training data and personally identifying data. Data is all around you and is growing every day. It only makes sense that software engineering has evolved to include data engineering, a subdiscipline that focuses directly on the transportation, transformation, and storage of data.

Perhaps you’ve seen big data job postings and are intrigued by the prospect of handling petabyte-scale data. Maybe you’re curious about how generative adversarial networks create realistic images from underlying data. Maybe you’ve never even heard of data engineering but are interested in how developers handle the vast amounts of data necessary for most applications today.

No matter which category you fall into, this introductory article is for you. You’ll get a broad overview of the field, including what data engineering is and what kind of work it entails.

In this article, you’ll learn:

- What the current state of the data engineering field is

- How data engineering is used in the industry

- Who the various customers of data engineers are

- What is and what isn’t part of the data engineering field

- How to decide if you want to pursue data engineering as a discipline

To begin, you’ll answer one of the most pressing questions about the field: What do data engineers do, anyway?

What Do Data Engineers Do?

Data engineering is a very broad discipline that comes with multiple titles. In many organizations, it may not even have a specific title. Because of this, it’s probably best to first identify the goals of data engineering and then discuss what kind of work brings about the desired outcomes.

The ultimate goal of data engineering is to provide organized, consistent data flow to enable data-driven work, such as:

- Training machine learning models

- Doing exploratory data analysis

- Populating fields in an application with outside data

This data flow can be achieved in any number of ways, and the specific tool sets, techniques, and skills required will vary widely across teams, organizations, and desired outcomes. However, a common pattern is the data pipeline. This is a system that consists of independent programs that do various operations on incoming or collected data.
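To make the idea of independent programs concrete, here is a minimal sketch of a pipeline in Python. It only illustrates the pattern: the stage names (`drop_empty`, `to_celsius`), the `run_pipeline` helper, and the sensor records are all hypothetical, and a real pipeline would usually run its stages as separate processes or services rather than as functions in one script.

```python
from typing import Callable, Iterable, Iterator

Record = dict
Stage = Callable[[Iterable[Record]], Iterator[Record]]


def drop_empty(records: Iterable[Record]) -> Iterator[Record]:
    """Discard records that carry no reading at all."""
    for record in records:
        if record.get("reading") is not None:
            yield record


def to_celsius(records: Iterable[Record]) -> Iterator[Record]:
    """Convert a Fahrenheit reading to Celsius, rounded to one decimal place."""
    for record in records:
        celsius = (record["reading"] - 32) * 5 / 9
        yield {**record, "reading": round(celsius, 1), "unit": "C"}


def run_pipeline(records: Iterable[Record], stages: list[Stage]) -> list[Record]:
    """Feed the output of each stage into the next, then collect the result."""
    for stage in stages:
        records = stage(records)
    return list(records)


# Hypothetical incoming data from three sensors.
incoming = [
    {"sensor": "a", "reading": 212.0},
    {"sensor": "b", "reading": None},
    {"sensor": "c", "reading": 32.0},
]
processed = run_pipeline(incoming, [drop_empty, to_celsius])
print(processed)
```

Because each stage only depends on the records it receives, you can test, replace, or reorder stages independently, which is the property that makes the pipeline pattern attractive.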

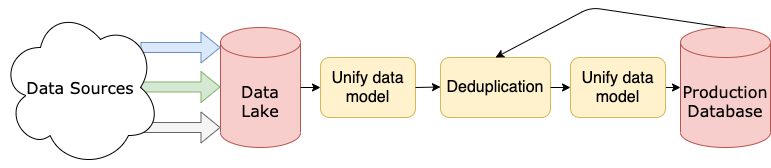

Data pipelines are often distributed across multiple servers:

This image is a simplified example data pipeline to give you a very basic idea of an architecture you may encounter. You’ll see a more complex representation further down.

The data can come from any source:

- Internet of Things devices

- Vehicle telemetry

- Real estate data feeds

- Normal user activity on a web application

- Any other collection or measurement tools you can think of

Depending on the nature of these sources, the incoming data will be processed in real-time streams or at some regular cadence in batches.
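The difference between the two processing styles can be sketched in a few lines of Python. This is a simplified illustration, not a production design: the `stream` and `batches` helpers and the `events` list are made up for the example, and real systems would read from a message queue or a scheduled job instead of an in-memory list.

```python
from itertools import islice
from typing import Iterable, Iterator


def stream(records: Iterable[dict]) -> Iterator[dict]:
    """Streaming style: handle each record as soon as it arrives."""
    for record in records:
        yield {**record, "processed": True}


def batches(records: Iterable[dict], size: int) -> Iterator[list[dict]]:
    """Batch style: collect up to `size` records, then hand them over together."""
    iterator = iter(records)
    while batch := list(islice(iterator, size)):
        yield batch


# Hypothetical incoming events.
events = [{"id": n} for n in range(5)]
streamed = list(stream(events))
batched = list(batches(events, size=2))
print(len(streamed), [len(batch) for batch in batched])
```

In this sketch the streaming version produces one output per input immediately, while the batch version groups the same five events into chunks of at most two.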

The pipeline that the data runs through is the responsibility of the data engineer. Data engineering teams are responsible for the design, construction, maintenance, and extension of data pipelines, and often for the infrastructure that supports them. They may also be responsible for the incoming data or, more often, the data model and how that data is finally stored.

If you think about the data pipeline as a type of application, then data engineering starts to look like any other software engineering discipline.

Many teams are also moving toward building data platforms. In many organizations, it’s not enough to have just a single pipeline saving incoming data to an SQL database somewhere. Large organizations have multiple teams that need different levels of access to different kinds of data.

For example, artificial intelligence (AI) teams may need ways to label and split cleaned data. Business intelligence (BI) teams may need easy access to aggregate data and build data visualizations. Data science teams may need database-level access to properly explore the data.

If you’re familiar with web development, then you might find this structure similar to the Model-View-Controller (MVC) design pattern. With MVC, data engineers are responsible for the model, AI or BI teams work on the views, and all groups collaborate on the controller. Building data platforms that serve all these needs is becoming a major priority in organizations with diverse teams that rely on data access.

Now that you’ve seen some of what data engineers do and how intertwined they are with the customers they serve, it’ll be helpful to learn a bit more about those customers and what responsibilities data engineers have to them.

What Are the Responsibilities of Data Engineers?

The customers that rely on data engineers are as diverse as the skills and outputs of the data engineering teams themselves. No matter what field you pursue, your customers will always determine what problems you solve and how you solve them.

In this section, you’ll learn about a few common customers of data engineering teams through the lens of their data needs:

- Data science and AI teams

- Business intelligence or analytics teams

- Product teams

Before any of these teams can work effectively, certain needs have to be met. In particular, the data must be:

- Reliably routed into the wider system

- Normalized to a sensible data model

- Cleaned to fill in important gaps

- Made accessible to all relevant members

These requirements are more fully detailed in the excellent article The AI Hierarchy of Needs by Monica Rogati. As a data engineer, you’re responsible for addressing your customers’ data needs. However, you’ll use a variety of approaches to accommodate their individual workflows.

Data Flow

To do anything with data in a system, you must first ensure that it can flow into and through the system reliably. Inputs can be almost any type of data you can imagine, including:

- Live streams of JSON or XML data

- Batches of videos updated every hour

- Monthly blood-draw data

- Weekly batches of labeled images

- Telemetry from deployed sensors

Data engineers are often responsible for consuming this data. That means designing a system that can take the data as input from one or many sources, transform it, and then store it for their customers. These systems are often called ETL pipelines, which stands for extract, transform, and load.

The data flow responsibility mostly falls under the extract step. But the data engineer’s responsibility doesn’t stop at pulling data into the pipeline. They have to ensure that the pipeline is robust enough to stay up in the face of unexpected or malformed data, sources going offline, and fatal bugs. Uptime is very important, especially when you’re consuming live or time-sensitive data.
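As a rough sketch of what this robustness can look like, the example below runs a tiny extract, transform, and load cycle using only Python’s standard library. The input lines, the `user` and `amount` fields, and the `payments` table are assumptions made for illustration. The point to notice is that the extract step sets malformed input aside instead of letting it crash the pipeline.

```python
import json
import sqlite3


def extract(lines):
    """Parse JSON lines, setting malformed ones aside instead of crashing."""
    good, rejected = [], []
    for line in lines:
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            rejected.append(line)
            continue
        if isinstance(record, dict) and "user" in record and "amount" in record:
            good.append(record)
        else:
            rejected.append(line)
    return good, rejected


def transform(records):
    """Normalize user names and convert amounts to integer cents."""
    return [
        (record["user"].strip().lower(), round(float(record["amount"]) * 100))
        for record in records
    ]


def load(rows, connection):
    """Store the transformed rows in a table for downstream customers."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS payments (user_name TEXT, cents INTEGER)"
    )
    connection.executemany("INSERT INTO payments VALUES (?, ?)", rows)
    connection.commit()


# Hypothetical raw input: two usable messages and two bad ones.
raw_lines = [
    '{"user": " Ada ", "amount": "12.50"}',
    '{"user": "grace", "amount": 3}',
    '{"user": "broken", "amount": ',  # truncated message
    '["not", "an", "object"]',        # valid JSON, wrong shape
]
records, rejected = extract(raw_lines)
connection = sqlite3.connect(":memory:")
load(transform(records), connection)
stored = connection.execute(
    "SELECT user_name, cents FROM payments ORDER BY user_name"
).fetchall()
print(stored, len(rejected))
```

Keeping the rejected lines, rather than silently discarding them, gives you something to inspect later when a source starts sending unexpected data.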

Your responsibility to maintain data flow will be pretty consistent no matter who your customer is. However, some customers can be more demanding than others, especially when the customer is an application that relies on data being updated in real time.

Data Normalization and Modeling

Data flowing into a system is great. However, at some point, the data needs to conform to some kind of architectural standard. Normalizing data involves tasks that make the data more accessible to users. This includes but is not limited to the following steps:

- Removing duplicates (deduplication)

- Fixing conflicting data

- Conforming data to a specified data model

These processes may happen at different stages. For example, imagine you work in a large organization with data scientists and a BI team, both of whom rely on your data. You may store unstructured data in a data lake to be used by your data science customers for exploratory data analysis. You may also store the normalized data in a relational database or a more purpose-built data warehouse to be used by the BI team in its reports.
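The three normalization steps listed earlier can be sketched together in Python. Everything specific here is an assumption for the sake of the example: the `Customer` data model, the field names, and especially the conflict rule, which simply lets the most recently updated record win. Your own organization may resolve conflicts very differently.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Customer:
    """Hypothetical target data model."""
    customer_id: int
    email: str
    updated_at: str  # ISO 8601 date, so string order matches date order


def normalize(raw_records):
    """Deduplicate by customer ID, keep the newest version, conform to Customer."""
    latest = {}
    for raw in raw_records:
        customer = Customer(
            customer_id=int(raw["id"]),
            email=raw["email"].strip().lower(),
            updated_at=raw["updated_at"],
        )
        current = latest.get(customer.customer_id)
        # Conflict rule (an assumption): the most recently updated record wins.
        if current is None or customer.updated_at > current.updated_at:
            latest[customer.customer_id] = customer
    return sorted(latest.values(), key=lambda c: c.customer_id)


# Hypothetical raw input with one duplicate and one conflict.
raw_records = [
    {"id": "1", "email": "Ada@Example.com ", "updated_at": "2020-11-01"},
    {"id": 1, "email": "ada@example.com", "updated_at": "2020-11-01"},
    {"id": 2, "email": "grace@example.com", "updated_at": "2020-10-15"},
    {"id": 2, "email": "g.hopper@example.com", "updated_at": "2020-11-20"},
]
customers = normalize(raw_records)
print(customers)
```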

You may have more or fewer customer teams or perhaps an application that consumes your data. The image below shows a modified version of the previous pipeline example, highlighting the different stages at which certain teams may access the data:

In this image, you see a hypothetical data pipeline and the stages at which you’ll often find different customer teams working.

If your customer is a product team, then a well-architected data model is crucial. A thoughtful data model can be the difference between a slow, barely responsive application and one that runs as if it already knows what data the user wants to access. These sorts of decisions are often the result of a collaboration between product and data engineering teams.

Data normalization and modeling are usually part of the transform step of ETL, but they’re not the only ones in this category. Another common transformative step is data cleaning.

Data Cleaning

Data cleaning goes hand-in-hand with data normalization. Some even consider data normalization to be a subset of data cleaning. But while data normalization is mostly focused on making disparate data conform to some data model, data cleaning includes a number of actions that make the data more uniform and complete, including:

- Casting the same data to a single type (for example, forcing strings in an integer field to be integers)

- Ensuring dates are in the same format

- Filling in missing fields if possible

- Constraining values of a field to a specified range

- Removing corrupt or unusable data

Data cleaning can fit into the deduplication and unifying data model steps in the diagram above. In reality, though, each of those steps is very large and can comprise any number of stages and individual processes.
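As a small illustration of the cleaning actions listed above, the following Python sketch cleans a handful of records. The field names, the accepted date formats, the 0 to 120 age range, and the `"unknown"` default are all assumptions chosen for the example, not rules that apply to every data set.

```python
from datetime import datetime

# Assumed input formats. A real pipeline would agree on these with its sources.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%Y/%m/%d")


def parse_date(value):
    """Return the date as an ISO 8601 string, or None if no format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except (TypeError, ValueError):
            continue
    return None


def clean(record, default_country="unknown"):
    """Return a cleaned copy of the record, or None if it is unusable."""
    try:
        age = int(record["age"])  # cast strings such as "42" to int
    except (KeyError, TypeError, ValueError):
        return None  # corrupt or missing age: drop the record
    signup = parse_date(record.get("signup"))
    if signup is None:
        return None  # unparseable date: drop the record
    return {
        "age": min(max(age, 0), 120),  # constrain to an assumed valid range
        "signup": signup,  # one date format for every record
        "country": record.get("country") or default_country,  # fill missing field
    }


# Hypothetical raw records with mixed types, formats, and gaps.
raw = [
    {"age": "42", "signup": "03/11/2020", "country": "NL"},
    {"age": 150, "signup": "2020/11/03"},
    {"age": "forty", "signup": "2020-11-03", "country": "US"},
]
cleaned = [row for row in map(clean, raw) if row is not None]
print(cleaned)
```

Whether an out-of-range value should be clamped, as it is here, or rejected outright is exactly the kind of decision your customer teams can help you make.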

The specific actions you take to clean the data will be highly dependent on the inputs, data model, and desired outcomes. The importance of clean data, though, is constant:

- Data scientists need it to perform accurate analyses.

- Machine learning engineers need it to build accurate and generalizable models.

- Business intelligence teams need it to provide accurate reports and forecasts to the business.

- Product teams need it to ensure their product doesn’t crash or give faulty information to users.

The data-cleaning responsibility falls on many different shoulders and is dependent on the overall organization and its priorities. As a data engineer, you should strive to automate cleaning as much as possible and do regular spot checks on incoming and stored data. Your customer teams and leadership can provide insight on what constitutes clean data for their purposes.

Data Accessibility

Data accessibility doesn’t get as much attention as data normalization and cleaning, but it’s arguably one of the more important responsibilities of a customer-centric data engineering team.

Data accessibility refers to how easy the data is for customers to access and understand. This is something that is defined very differently depending on the customer:

- Data science teams may simply need data that’s accessible with some kind of query language.

- Analytics teams may prefer data grouped by some metric, accessible through either basic queries or a reporting interface.

- Product teams will often want data that is accessible through fast and straightforward queries that don’t change often, with an eye toward product performance and reliability.
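To show how the same stored data can be exposed differently to each of these customers, here is a minimal sketch using Python’s built-in `sqlite3` module. The `rides` table, the `fares_by_city` view, and the index are hypothetical, and an in-memory SQLite database stands in for whatever storage your organization actually uses.

```python
import sqlite3

connection = sqlite3.connect(":memory:")
connection.executescript(
    """
    CREATE TABLE rides (
        ride_id INTEGER PRIMARY KEY, city TEXT, rider_id INTEGER, fare REAL
    );
    INSERT INTO rides (city, rider_id, fare) VALUES
        ('Amsterdam', 1, 12.0), ('Amsterdam', 2, 8.0), ('Berlin', 1, 20.0);

    -- Analytics: data pre-grouped by a metric, exposed as a view.
    CREATE VIEW fares_by_city AS
        SELECT city, COUNT(*) AS rides, SUM(fare) AS total_fare
        FROM rides GROUP BY city;

    -- Product: an index to keep a fixed, frequent lookup fast.
    CREATE INDEX idx_rides_rider ON rides (rider_id);
    """
)

# Data science: direct query access to the row-level data.
all_rides = connection.execute(
    "SELECT city, fare FROM rides ORDER BY ride_id"
).fetchall()

# Analytics: a basic query against the aggregated view.
report = connection.execute(
    "SELECT city, rides, total_fare FROM fares_by_city ORDER BY city"
).fetchall()

# Product: the same parameterized query every time.
rider_fares = connection.execute(
    "SELECT fare FROM rides WHERE rider_id = ? ORDER BY ride_id", (1,)
).fetchall()

print(report)
```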

Because larger organizations provide these teams and others with the same data, many have moved towards developing their own internal platforms for their disparate teams. A great mature example of this is the ride-hailing service Uber, which has shared many of the details of its impressive big data platform.

In fact, many data engineers are finding themselves becoming platform engineers, making clear the continued importance of data engineering skills to data-driven businesses. Because data accessibility is intimately tied to how data is stored, it’s a major component of the load step of ETL, which refers to how data is stored for later use.

Now that you’ve met some common data engineering customers and learned about their needs, it’s time to look more closely at what skills you can develop to help address those needs.

What Are Common Data Engineering Skills?

Data engineering skills are largely the same ones you need for software engineering. However, there are a few areas on which data engineers tend to have a greater focus. In this section, you’ll learn about several important skill sets:

- General programming concepts

- Databases

- Distributed systems and cloud engineering

Each of these will play a crucial role in making you a well-rounded data engineer.

General Programming Skills

Data engineering is a specialization of software engineering, so it makes sense that the fundamentals of software engineering are at the top of this list. As with other software engineering specializations, data engineers should understand design concepts such as DRY (don’t repeat yourself), object-oriented programming, data structures, and algorithms.

As in other specialties, there are also a few favored languages. As of this writing, the ones you see most often in data engineering job descriptions are Python, Scala, and Java. What makes these languages so popular?

Python is popular for several reasons. One of the biggest is its ubiquity. By many measures, Python is among the top three most popular programming languages in the world. For example, it ranked second in the November 2020 TIOBE Community Index and third in Stack Overflow’s 2020 Developer Survey.

It’s also widely used by machine learning and AI teams. Teams that work closely together often need to be able to communicate in the same language, and Python is still the lingua franca of the field.

Another, more targeted reason for Python’s popularity is its use in orchestration tools like Apache Airflow and the available libraries for popular tools like Apache Spark. If an organization uses tools like these, then it’s essential to know the languages they make use of.

Scala is also quite popular, and like Python, this is partially due to the popularity of tools that use it, especially Apache Spark. Scala combines functional and object-oriented programming and runs on the Java Virtual Machine (JVM), so it interoperates seamlessly with Java.

Java isn’t quite as popular in data engineering, but you’ll still see it in quite a few job descriptions. This is partially because of its ubiquity in enterprise software stacks and partially because of its interoperability with Scala. With Scala being used for Apache Spark, it makes sense that some teams make use of Java as well.

In addition to general programming skills, a good familiarity with database technologies is essential.

Source: realpython.com